NVIDIA GTC (GPU Technology Conference) 2019, one of the biggest events in Silicon Valley, hosted approximately 9,000 attendees and showcased new products and technologies from NVIDIA partners and affiliates from all over the world. Several large manufacturing companies, automakers, mapping companies, successful start-ups participated in the sessions conducted during the event. The major focus of these sessions was on the use of NVIDIA technologies in research and development across industries such as entertainment, medical science, the arts, machine learning, data science, artificial intelligence and autonomous driving.

For the contest, NVIDIA requested research posters from academia and industries to demonstrate work products using Graphics Processing Units (GPUs). Submissions needed to provide actual or expected results/accomplishments and needed to demonstrate a significant innovation or improvement using Graphics Processing Unit (GPU) computing. HARMAN was selected to present in “Autonomous Vehicles” category. The team that participated from HARMAN, Automotive Artificial Intelligence group, Richardson, Texas included DR. Behrouz Saghafi, Dr. Mengling Hettinger and Nikhil Patel.

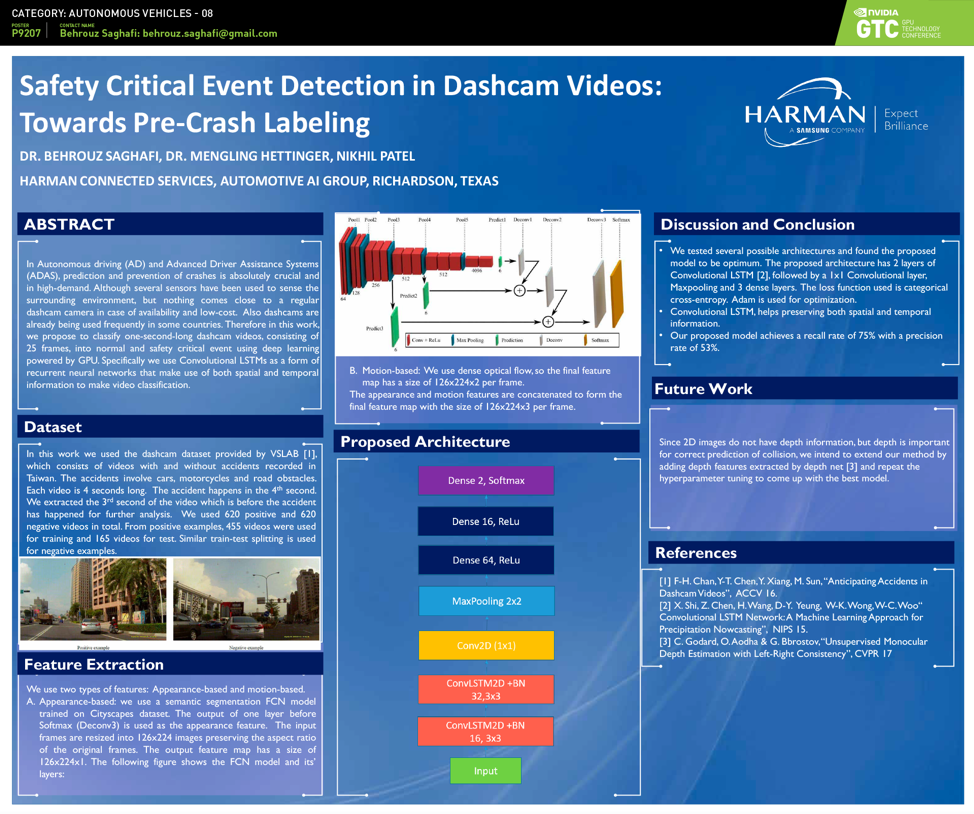

HARMAN’s research was focused on ‘Safety Critical Event Detection in Dashcam Videos: Towards Pre-Crash Labeling.’ One of the essential functions of Autonomous Driving systems and Advanced Driver Assistance systems (ADAS) is predicting and preventing crashes. Processing sensor data to understand the surrounding environment is effective but HARMAN’s team proposed an alternative to it. The alternative would use a regular dashcam camera making it a more cost-effective solution. Dashcams are already used by vehicle owners worldwide, hence, it is easy to implement.

The HARMAN proposed model would classify one-second-long dashcam videos, consisting of 25 frames, into normal and safety critical events using deep learning powered by GPU. It would specifically use Convolutional Long Short-term Memory Networks (LSTMs) as a form of recurrent neural networks that make use of both spatial and temporal information to make video classification. To propose this model, the team studied 620 positive and 620 negative videos in total. From positive examples, 455 videos were used for training and 165 videos for test. The proposed model achieved a recall rate of 75% with a precision rate of 53%.

The team believes that depth information, which is usually not available in 2D images, is important for correct prediction of collision. HARMAN intends to extend the proposed method by adding depth features and repeat the hyperparameter tuning to come up with the best model.

Check out our award-winning poster to see how our researchers are using GPU-based supercomputing to develop safe and secure connected vehicles.

You can also view it on the NVIDIA GTC website.

For more information, do write to us here or on LinkedIn/Twitter.